Journal Prestige Index: Expanding the Horizons of Assessment of Research Impact

By Muhammed Mubarak, Shabana Seemee

doi: 10.29271/jcpsp.2021.11.1261

Assessing the impact and quality of research in academia is a formidable and controversial task, and one with major implications for the stakeholders concerned. There is still no single, universally agreed-upon tool for the global assessment of scholarly or academic work. The most tangible product of research is the publication of its results, often as an article in a scholarly journal. Over the years, a variety of metrics, based primarily on citations to published papers, have been used to assess the quality of journals.1-4

The oldest and still dominant research metric is the Journal Impact Factor (JIF), published annually in the Journal Citation Reports (JCR). JIF was originally developed as a tool for selecting journals for inclusion in the Science Citation Index (SCI) and in library collections. However, its use and misuse grew greatly over the following decades. The popularity and dominance of JIF were unparalleled until recently, when competitors such as Scopus's CiteScore entered the field.5 Both JIF and CiteScore gauge the prestige or importance of a journal, not of an individual researcher or paper. Such single-number metrics carry several caveats and biases.6
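To make the "single number" concrete: the two-year JIF for a year Y is the number of citations received in Y by items a journal published in Y-1 and Y-2, divided by the number of citable items (articles and reviews) it published in those two years. Since 2020, CiteScore has instead used a four-year window, dividing the citations received in Y-3 to Y by the documents published in those same four years. The sketch below shows only this arithmetic; the counts are made up for a hypothetical journal, and in practice Clarivate and Scopus derive them from their own databases.

```python
def journal_impact_factor(citations_in_year: int, citable_items_prev_two_years: int) -> float:
    """Two-year JIF for year Y.

    citations_in_year: citations received in Y by anything the journal
        published in Y-1 and Y-2 (numerator counts all item types).
    citable_items_prev_two_years: articles and reviews published in Y-1 and Y-2.
    """
    if citable_items_prev_two_years <= 0:
        raise ValueError("need at least one citable item")
    return citations_in_year / citable_items_prev_two_years


def citescore(citations_four_years: int, documents_four_years: int) -> float:
    """CiteScore for year Y (2020 methodology): citations received in Y-3..Y
    by documents published in Y-3..Y, divided by the number of those documents."""
    if documents_four_years <= 0:
        raise ValueError("need at least one document")
    return citations_four_years / documents_four_years


# Illustrative, made-up counts for a hypothetical journal.
jif = journal_impact_factor(citations_in_year=450, citable_items_prev_two_years=300)
cs = citescore(citations_four_years=1200, documents_four_years=600)
print(f"JIF = {jif:.3f}, CiteScore = {cs:.2f}")
```

Note the asymmetry in the JIF: its numerator counts citations to all items, while its denominator counts only citable items, which is one of the biases often raised against it.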

Against this background, a new metric for the transparent, fair and comprehensive assessment of research impact was the need of the hour. Recently, the Higher Education Commission (HEC) of Pakistan took a bold initiative in this regard and developed a new combinatorial, proprietary, derived metric known as the journal prestige index (JPI), which is calculated from six well-established, publicly available and highly influential citation-related parameters.7 These were chosen from a list of 39 different citation and usage metrics.8 Both raw scores and percentiles are used so that all six factors carry equal weight.
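The exact JPI formula is not given here, but the idea of equal weighting through percentiles can be illustrated. The sketch below assumes a hypothetical scheme: each of six metrics is converted to a percentile rank within one subject area, and the six percentiles are averaged with equal weights. The metric values, journal names and the function `toy_prestige_index` are all made up for illustration and are not HEC's implementation.

```python
from statistics import mean

# Hypothetical subject area: four journals, each with six citation metrics.
# Values are made up; the real JPI inputs are not reproduced here.
SUBJECT_AREA = {
    "Journal A": [1.0, 0.5, 10, 0.2, 1.5, 20],
    "Journal B": [2.0, 0.9, 25, 0.4, 1.1, 35],
    "Journal C": [3.5, 1.4, 40, 0.7, 2.2, 50],
    "Journal D": [0.6, 0.3, 5, 0.1, 0.9, 12],
}


def percentile_rank(value: float, population: list[float]) -> float:
    """Share (in %) of journals whose score is at or below `value`."""
    at_or_below = sum(1 for v in population if v <= value)
    return 100.0 * at_or_below / len(population)


def toy_prestige_index(area: dict[str, list[float]]) -> dict[str, float]:
    """Equal-weight mean of per-metric percentile ranks within one subject area
    (an assumed scheme, not the published JPI formula)."""
    n_metrics = len(next(iter(area.values())))
    # One column of raw scores per metric, across all journals in the area.
    columns = [[scores[i] for scores in area.values()] for i in range(n_metrics)]
    return {
        name: mean(percentile_rank(scores[i], columns[i]) for i in range(n_metrics))
        for name, scores in area.items()
    }


if __name__ == "__main__":
    index = toy_prestige_index(SUBJECT_AREA)
    for name, score in sorted(index.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{name}: {score:.1f}")
```

Converting raw scores to percentiles puts every metric on the same 0 to 100 scale, so a metric with large raw values (such as total citations) cannot dominate the average; this is one plausible reading of "equal weightage".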

HEC has also developed a new information technology (IT)-based journal recognition and categorisation portal, the HEC Journal Recognition System (HJRS).7 The system was launched in 2019 and came into effect on 1st July, 2020. All journals, whether local or international, are eligible if they are indexed in Web of Science (WoS), Scopus, or both. For the time being, local journals that are not indexed in either of these two major international databases may also be eligible, subject to the approval of a committee.

HEC has retained the previous W, X and Y categories, with W the highest and Y the lowest, and has eliminated the Z category. However, new, objective thresholds have been set for these categories, and they apply equally to all scholarly journals, local or international; no distinction is made between the two when categories are assigned. The category thresholds are relative, based on JPI scores, and the cut-off values vary between subject areas. The thresholds are set by the respective Scientific Review Panels and may change over time. All journal rankings and categorisations are labile and subject to upgrading or downgrading, and change is most likely for journals that lie near the category thresholds.

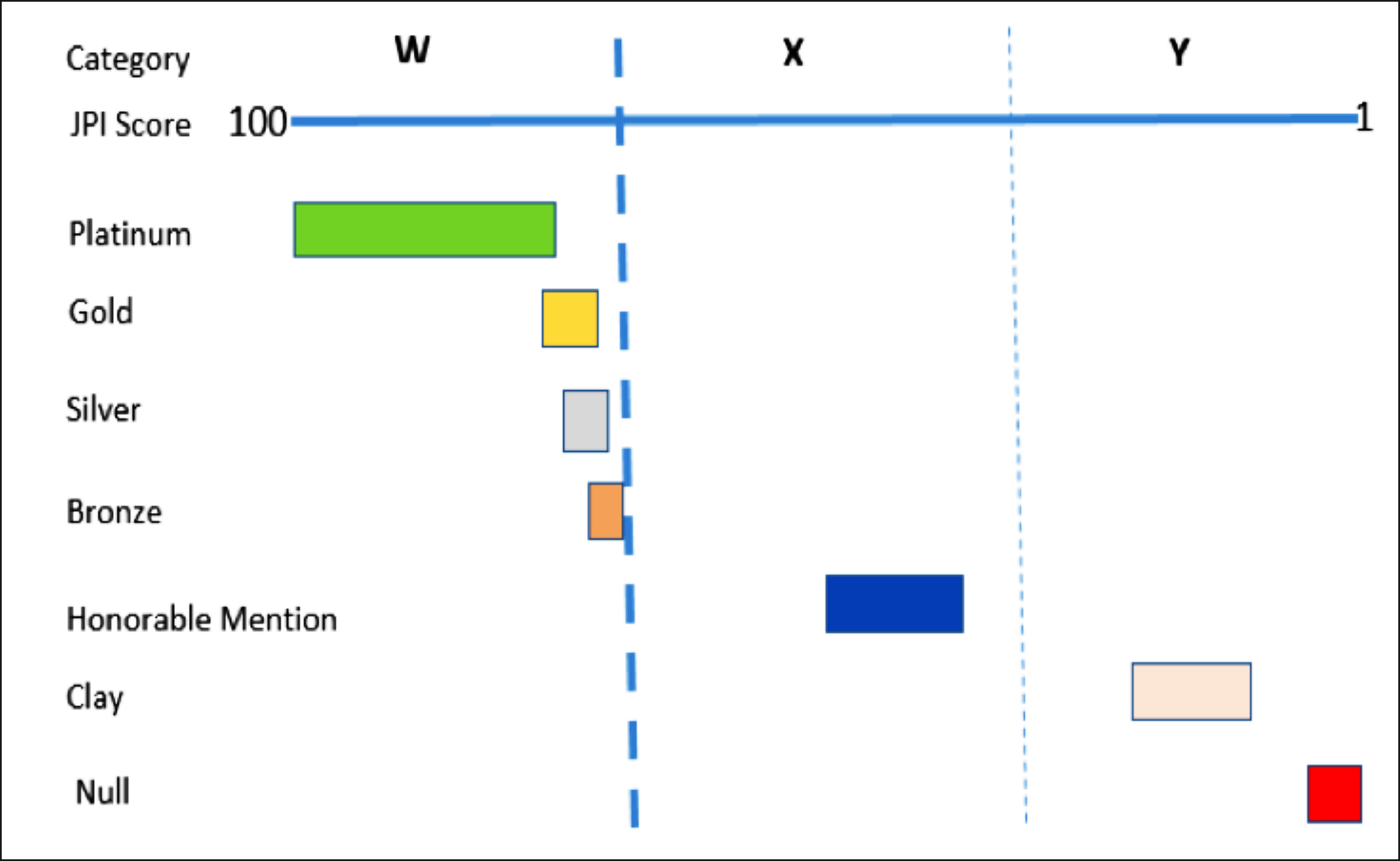

Before selecting a journal, authors should be able to predict how stable its position in a given category is likely to be. HEC has addressed this point in HJRS by introducing seven medallions: Platinum, Gold, Silver, Bronze, Honorable Mention, Clay and Null. As noted earlier, all journal metrics and rankings change and are revised at regular intervals. The medallions provide an easy reference for predicting the future stability of a journal's category, based on its distance from the relative W-category threshold for a given year (Figure 1). For example, journals with a Platinum medallion have a negligible probability of ever losing W category. Bronze journals, by contrast, lie very close to the threshold and are at significant risk of losing W category at any time; in other words, their category is unstable. The medallions thus serve as a quick visual guide for making an informed choice of journal.
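The medallion logic can be sketched as a lookup on the signed distance between a journal's JPI and the W-category threshold for its subject area and year. Only the order of the medallions comes from the text; the numeric band edges in `MEDALLION_BANDS`, the threshold value of 60, and the placement of Honorable Mention, Clay and Null below the threshold are assumptions made for illustration, not HJRS's published rules.

```python
# Hypothetical band edges: minimum signed distance (in JPI units) from the
# W-category threshold needed for each medallion, checked from the top down.
# Only the ordering comes from the text; the numbers, and placing Honorable
# Mention, Clay and Null below the threshold, are assumptions.
MEDALLION_BANDS = [
    ("Platinum", 30.0),
    ("Gold", 20.0),
    ("Silver", 10.0),
    ("Bronze", 0.0),
    ("Honorable Mention", -10.0),
    ("Clay", -20.0),
]


def medallion(jpi: float, w_threshold: float) -> str:
    """Return a medallion for a journal given its JPI and the subject area's
    W-category threshold for the year (both hypothetical inputs)."""
    distance = jpi - w_threshold  # positive means above the W cut-off
    for name, min_distance in MEDALLION_BANDS:
        if distance >= min_distance:
            return name
    return "Null"


if __name__ == "__main__":
    w_threshold = 60.0  # hypothetical cut-off for one subject area and year
    for jpi in (95.0, 62.0, 55.0, 45.0, 30.0):
        print(jpi, medallion(jpi, w_threshold))
```

Under these assumed bands, a journal at JPI 62 earns Bronze: a drop of just over two points at the next annual revision would take it below the W threshold, which is the instability the Bronze medallion signals. The threshold is passed in as a parameter because, as described above, it differs by subject area and may change from year to year.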

HJRS represents a significant improvement in the objective, automated, unbiased and comprehensive assessment and categorisation of scholarly journals. It has markedly broadened the range of journals available to researchers for publishing their work. Deriving JPI from six metrics drawn from different sources increases the credibility of this multi-parametric metric and makes it more representative of a journal's research impact. Local and international journals are judged by the same yardstick. Category thresholds vary by subject area, being higher in some areas; this is a positive feature, though it needs further refinement. The medallions offer a ready visual reference to the future stability of a journal's category.

Figure 1: Schematic diagram showing the three categories, the journal prestige index (JPI) and the medallions. Dotted vertical lines represent the category thresholds; the bold dotted line represents the W-category threshold. Medallions are assigned according to a journal's distance from this threshold. The sizes and positions of the medallions relative to the W-category threshold line are only approximate.


The new system has certain limitations. Medline-indexed journals have not been included in HJRS, possibly because Medline does not provide journal assessment metrics. HEC also needs to lower the thresholds further for some subject areas, for example the medical field, where clinicians are primarily not researchers and conduct research as an activity secondary to clinical practice. Under the new system, many local journals that were recognised by the former Pakistan Medical and Dental Council (PMDC), now the Pakistan Medical Commission (PMC), and were previously in W category have been moved to lower categories. The arbitrary bar by which the new system assigns categories to journals may need to be revisited, with the Pakistani context kept to the fore. A higher cut-off may discourage national journals, which face an inherent limitation: the manuscripts Pakistani researchers submit to them are usually of limited interest to readers. This is reflected in the reduced number of articles from Pakistan in national journals that have an impact factor (IF). Because HEC awards research funds on the basis of IF as well as citation scores, Pakistani researchers who do not qualify for funding are discouraged.

Although HJRS is, overall, a bold step in the right direction for academic journal recognition and ranking, the national context must also be considered. Input from stakeholders, such as medical journal editors and their representative body, the Pakistan Association of Medical Journal Editors (PAME), is therefore important.

Decisions made in isolation, by people outside the field of medical journalism, increase conflict rather than facilitate research in Pakistan.

REFERENCES

- Kaldas M, Michael S, Hanna J, Yousef GM. Journal impact factor: A bumpy ride in an open space. J Investig Med 2020; 68(1):83-7. doi: 10.1136/jim-2019-001009.

- Shanbhag VK. Journal impact factor. Biomed J 2016; 39(3):225. doi: 10.1016/j.bj.2015.12.001.

- Garfield E. The evolution of the Science Citation Index. Int Microbiol 2007; 10(1):65-9.

- Bornmann L, Marx W, Gasparyan AY, Kitas GD. Diversity, value and limitations of the journal impact factor and alternative metrics. Rheumatol Int 2012; 32(7):1861-7. doi: 10.1007/s00296-011-2276-1.

- Baker DW. Introducing CiteScore, our journal's preferred citation index: Moving beyond the impact factor. Jt Comm J Qual Patient Saf 2020; 46(6):309-10. doi: 10.1016/j.jcjq.2020.03.005.

- Gasparyan AY, Nurmashev B, Yessirkepov M, Udovik EE, Baryshnikov AA, Kitas GD. The journal impact factor: Moving toward an alternative and combined scientometric approach. J Korean Med Sci 2017; 32(2):173-9. doi: 10.3346/jkms.2017.32.2.173.

- HEC Journal Recognition System (HJRS). Available at: https://hjrs.hec.gov.pk

- Bollen J, Van de Sompel H, Hagberg A, Chute R. A principal component analysis of 39 scientific impact measures. PLoS One 2009; 4(6):e6022. doi: 10.1371/journal.pone.0006022.